2023 Research Highlights

Taking AI + Science mainstream

2023 was an exciting year: we brought many of our AI+Science models to real-world applications, from weather forecasting to drug discovery and industrial design. I presented our work on AI+Science to the White House Science Council (PCAST), along with Demis Hassabis and Fei-Fei Li. Eric Schmidt also laid out his vision for AI+Science and featured many of our works in his editorial.

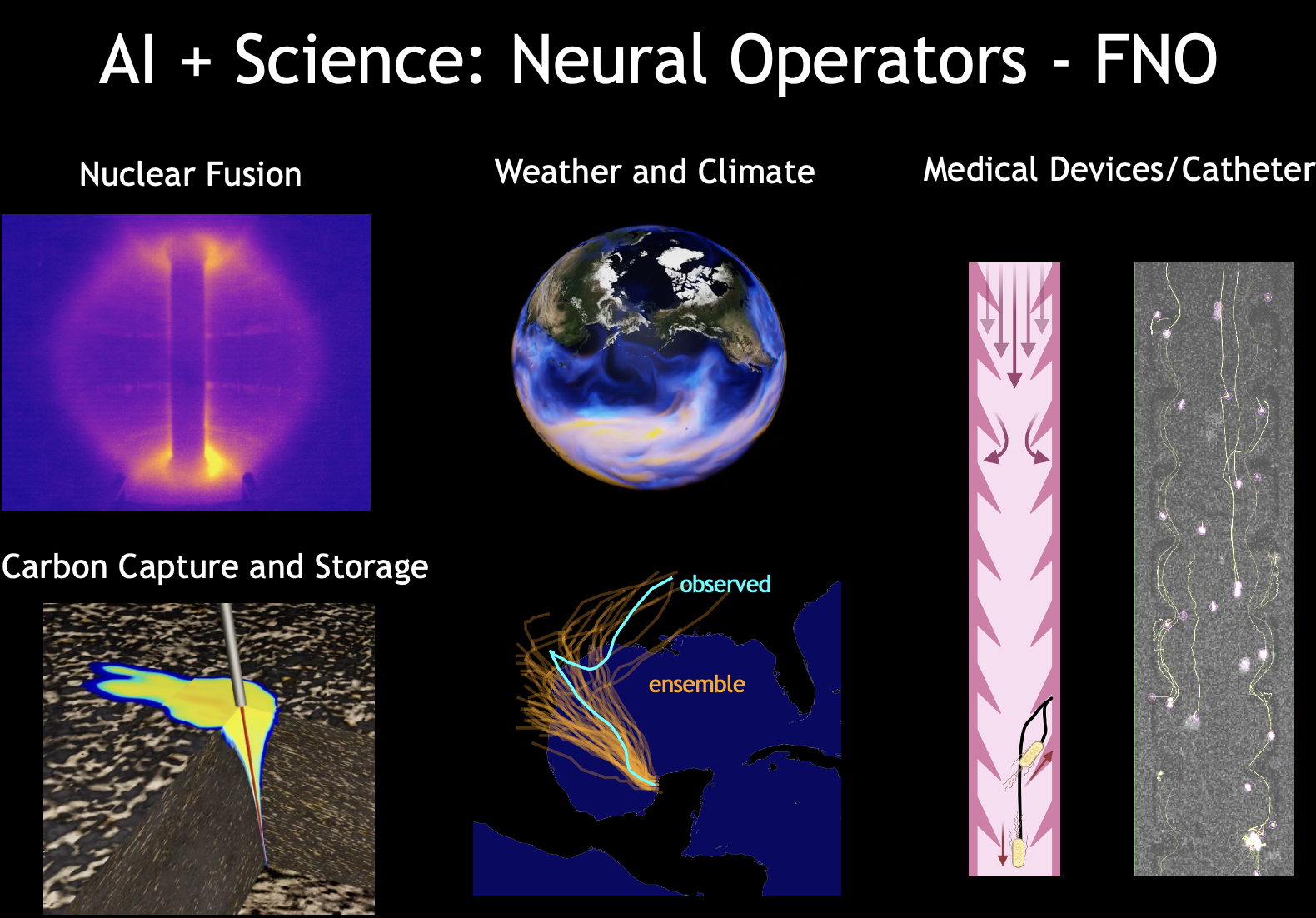

Neural operators as the backbone of AI + Science

While there have been many attempts to use large language models (LLMs) for scientific problems, with some success, there are inherent limitations. LLMs are trained only on text data, which provides a high-level understanding but not the ability to internally simulate scientific processes such as fluid dynamics or material deformation. Further, image generation models operate only at a fixed resolution, which makes them unsuitable for capturing multi-scale processes that occur across resolutions. To overcome this, we created neural operators, which work at all resolutions and can do super-resolution without retraining. Neural operators have replaced traditional simulations while being tens or even hundreds of thousands of times faster across multiple scientific domains, including weather forecasting (more below), carbon capture and storage modeling, industry-scale automotive aerodynamics, and early detection of disruptions in nuclear fusion. Further, we have been open-sourcing these frameworks to make them widely accessible.
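To make the resolution claim concrete, here is a minimal sketch of a single Fourier-type layer in one dimension, written in plain Python. It is a toy illustration, not our released implementation: the names `fourier_coefficient`, `spectral_layer`, and `sample`, the hand-picked weights, and the test function are all assumptions made for this example. The point it shows is that the layer's parameters are attached to a fixed number of Fourier modes rather than to grid points, so the same weights can be applied to a 16-point or a 64-point sampling of the same function.

```python
import cmath
import math


def fourier_coefficient(samples, k):
    """Approximate the k-th Fourier coefficient of a periodic function on [0, 1)."""
    n = len(samples)
    return sum(v * cmath.exp(-2j * math.pi * k * j / n) for j, v in enumerate(samples)) / n


def spectral_layer(samples, weights):
    """Scale the lowest Fourier modes by `weights` and evaluate on the input grid.

    weights[m] multiplies modes +m and -m, so the number of parameters depends
    on the number of retained modes, not on len(samples).
    """
    n = len(samples)
    modes = len(weights) - 1
    coeffs = {k: fourier_coefficient(samples, k) for k in range(-modes, modes + 1)}
    output = []
    for j in range(n):
        x = j / n  # grid point in [0, 1)
        value = sum(
            weights[abs(k)] * coeffs[k] * cmath.exp(2j * math.pi * k * x)
            for k in range(-modes, modes + 1)
        )
        output.append(value.real)  # imaginary part vanishes for real input
    return output


def sample(n):
    """Sample the illustrative input sin(2*pi*x) + 0.5*cos(4*pi*x) at n grid points."""
    return [
        math.sin(2 * math.pi * j / n) + 0.5 * math.cos(4 * math.pi * j / n)
        for j in range(n)
    ]


weights = [1.0, 2.0, 0.0]  # hypothetical "learned" weights for modes 0, 1, 2
coarse = spectral_layer(sample(16), weights)  # 16-point grid
fine = spectral_layer(sample(64), weights)    # 64-point grid, same weights
```

With these weights the layer maps the input to 2·sin(2πx) on either grid, so `coarse[j]` and `fine[4 * j]` agree up to floating-point error. In practice such layers are combined with nonlinearities and the weights are learned from data rather than chosen by hand.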

Neural Operators for Design

Neural operators do more than make physical simulations faster. Because they are differentiable, they can indicate how a design needs to change to better achieve its physical goals, and so can be used to create new designs. We used a neural operator to design a medical catheter, then 3D-printed and lab-tested the design, and saw up to two orders of magnitude reduction in bacterial contamination, reducing the overall risk of catheter-associated infections. The main idea is that irregular, asymmetric boundary shapes create vortices that help prevent bacteria from swimming upstream. However, finding the optimal shape is not straightforward. Since our neural operator is both fast and differentiable, we can optimize the geometry directly and obtain a design candidate that best prevents bacteria from swimming upstream. We are now applying this approach to design in more complex and high-dimensional settings.
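The design loop described above can be sketched as gradient descent on a differentiable surrogate. The following is a deliberately simplified illustration, not the catheter model: `surrogate_objective` is a hypothetical quadratic stand-in for a trained neural operator, `SENSITIVITY` and `BEST_SHAPE` are made-up constants, and the gradient is written by hand where automatic differentiation would normally supply it.

```python
# Made-up constants for illustration only: how strongly each shape parameter
# affects the objective, and the (normally unknown) best shape.
SENSITIVITY = [1.0, 0.5]
BEST_SHAPE = [0.3, 1.2]


def surrogate_objective(design):
    """Hypothetical differentiable surrogate: predicted contamination for a design."""
    return sum(w * (d - t) ** 2 for w, d, t in zip(SENSITIVITY, design, BEST_SHAPE))


def surrogate_gradient(design):
    """Analytic gradient of the toy surrogate (autodiff would provide this in practice)."""
    return [2.0 * w * (d - t) for w, d, t in zip(SENSITIVITY, design, BEST_SHAPE)]


def optimize_design(design, learning_rate=0.1, steps=200):
    """Follow the surrogate's gradient downhill to improve the design parameters."""
    for _ in range(steps):
        grad = surrogate_gradient(design)
        design = [d - learning_rate * g for d, g in zip(design, grad)]
    return design


initial = [0.0, 0.0]
optimized = optimize_design(initial)
```

Each step evaluates only the fast surrogate, never a full simulation, which is what makes running hundreds of design iterations cheap.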

Neural Operators for Weather

Our FourCastNet AI model is built on neural operators. It is now providing real-time weather forecasts at ECMWF, the premier weather agency. It has been shown to be accurate while being tens of thousands of times faster than current forecasting systems. It even predicted a recent hurricane earlier than traditional models. Further, the speedup from AI models enables accurate risk assessment of extreme weather events such as hurricanes and heat waves, since we can create many scenario forecasts for such chaotic events. FourCastNet, launched in 2021, was the first AI-based high-resolution weather model, at a time when this was widely thought to be impossible. It is exciting to see other teams follow our lead and create a Cambrian explosion for AI + weather. Further, unlike others, our model and code are completely open-source and free of license and patent restrictions. Moreover, our model incorporates spherical geometry, is robust to drastic changes in input conditions, and has stable long rollouts, unlike other models that are blind to the domain. Our work on FourCastNet also won the best paper award at PASC 2023.
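The idea of using many scenario forecasts for risk assessment can be illustrated with a small ensemble sketch. This is not FourCastNet: `toy_forecast` is a hypothetical stand-in (a chaotic logistic map) for a fast learned forecast model, and the threshold, noise level, and ensemble size are arbitrary assumptions. The sketch perturbs the initial condition, runs each ensemble member forward, and reports the fraction of members that exceed a threshold.

```python
import random


def toy_forecast(state, steps):
    """Hypothetical stand-in for a fast forecast model: a chaotic logistic map on [0, 1]."""
    for _ in range(steps):
        state = 3.9 * state * (1.0 - state)
    return state


def exceedance_probability(initial_state, threshold, members=1000, steps=50,
                           noise=1e-3, seed=0):
    """Estimate the chance that the forecast exceeds `threshold` from a perturbed ensemble."""
    rng = random.Random(seed)  # fixed seed so the estimate is reproducible
    hits = 0
    for _ in range(members):
        # Perturb the initial condition slightly and keep it inside [0, 1].
        perturbed = min(max(initial_state + rng.gauss(0.0, noise), 0.0), 1.0)
        if toy_forecast(perturbed, steps) > threshold:
            hits += 1
    return hits / members


risk = exceedance_probability(0.4, threshold=0.9)
```

Because the system is chaotic, tiny perturbations lead to very different outcomes, so a single forecast says little about rare events. A model that is orders of magnitude faster makes large ensembles like this affordable.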

In 2024, I am excited to see what scaling neural operators into foundation models that understand multiple domains of science and engineering will bring. There are already early sparks of success, and we will soon have more to share. Stay tuned!

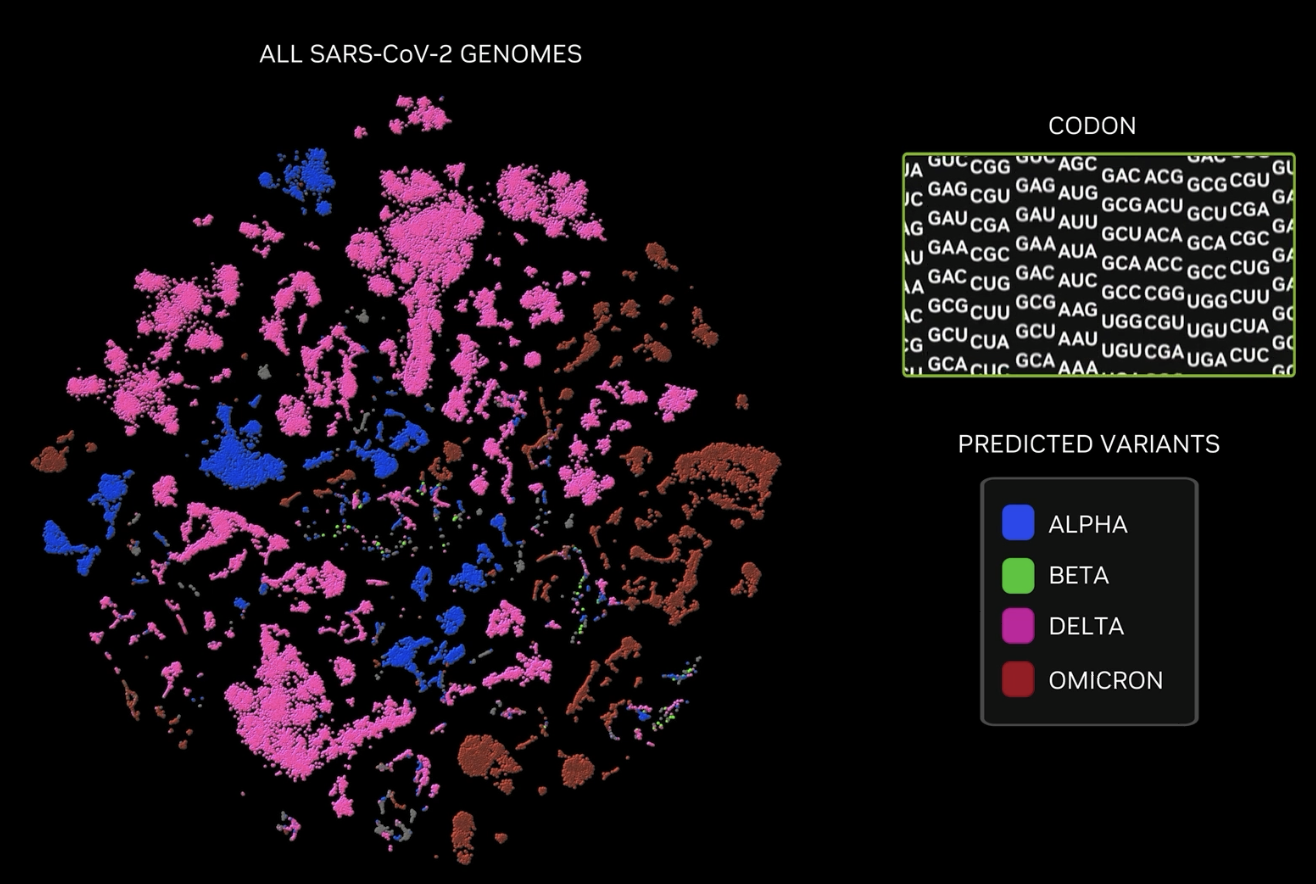

Other developments in AI+Science

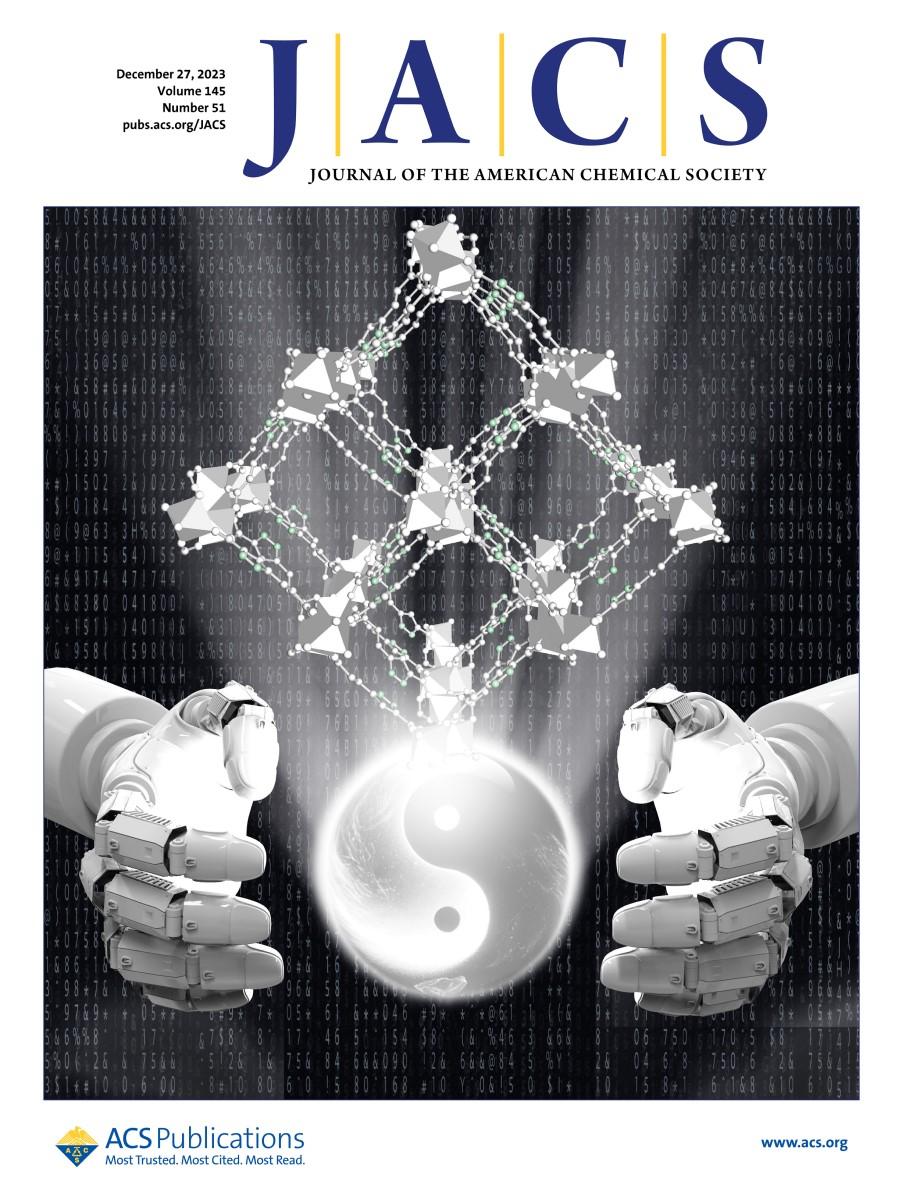

In 2023, we made huge strides in building foundation models for biology and chemistry by learning representations at different levels. Building on our genome-scale language model, which we trained on a large biobank of viral and bacterial genomes, we were able to predict the latest coronavirus variants that emerged. In addition, we used the model to design new proteins by adding bio-physical reward functions, analogous to the RLHF framework in LLMs. We learned multi-modal representations that combine text data with molecular and protein structural information to enable strong generalization across a variety of tasks, such as molecular editing with text instructions. We also used LLMs to propose linker designs for metal–organic frameworks (MOFs) by mutating and modifying existing linker structures. MOFs have widespread applications, including direct air capture (featured on the cover of Journal of the American Chemical Society). In addition, we constructed vision foundation models for surgery that can perform multiple tasks, including gesture recognition and assessing the skills of surgeons (featured on the cover of Nature Biomedical Engineering).

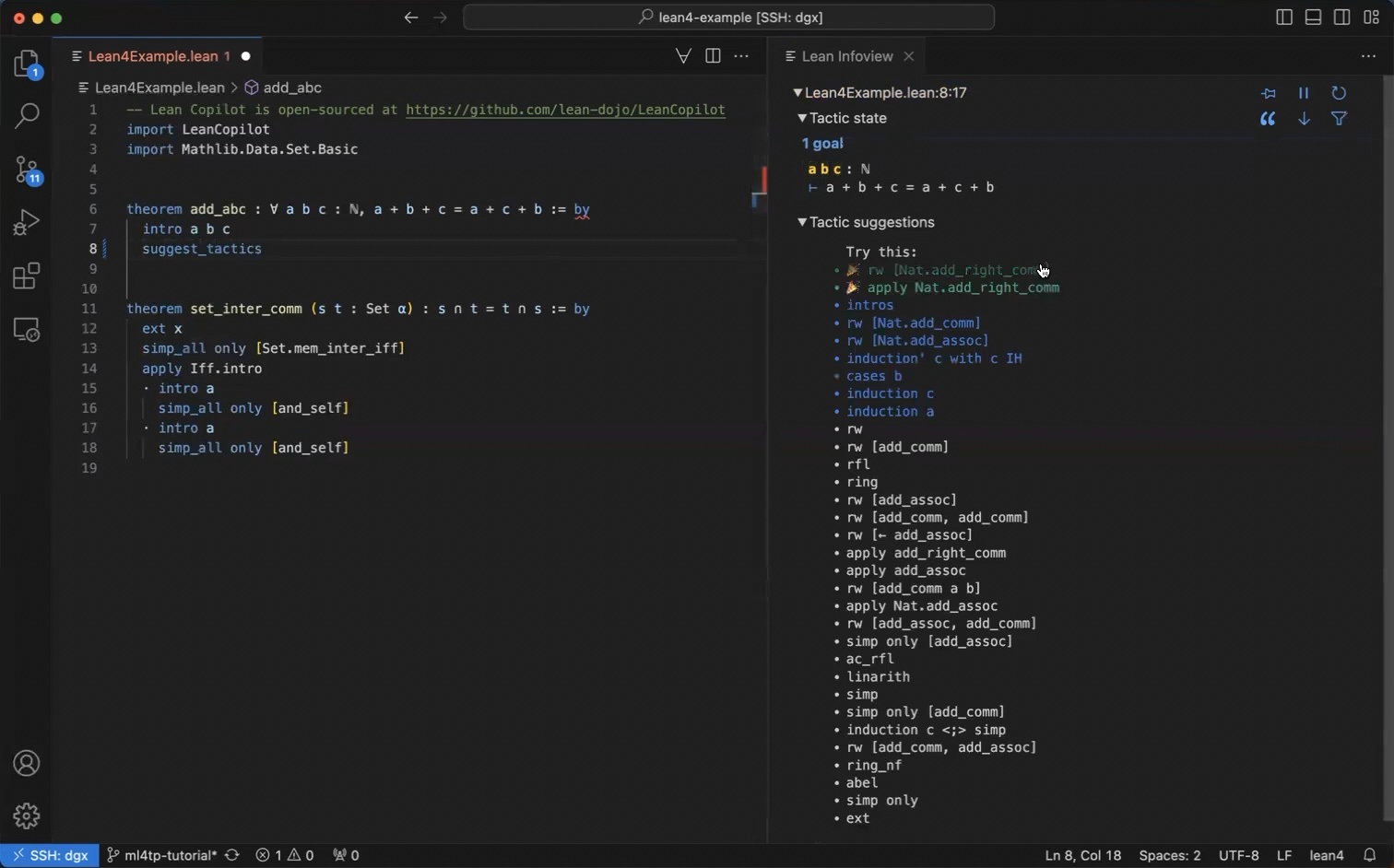

AI + Math: Removing hallucination in language models for theorem proving

Current LLMs are not reliable for math and reasoning tasks such as theorem proving, since they make mistakes and suffer from hallucinations. Hence, until now, mathematical proofs have mostly been derived manually and have required careful verification. Theorem provers like Lean can formally verify each step of a proof, but proofs are laborious for humans to write in Lean. Using LLMs to suggest Lean proof tactics automatically speeds up proof synthesis significantly, and we can incorporate human input only when needed.

To accomplish this, we released the first open-source LLMs for proving theorems in Lean and developed them into a user-friendly tool, Lean Copilot, where humans and LLMs can collaboratively prove theorems with 100% accuracy. With Lean Copilot: (1) LLMs can suggest proof steps, search for proofs, and select useful lemmas from a large mathematical library. (2) Lean Copilot is easy to set up as a Lean package and works seamlessly within Lean’s VS Code workflow. (3) Users can use our built-in LLMs from LeanDojo or bring their own models that run either locally or on the cloud. Thus, Lean Copilot makes LLMs more accessible to Lean users, which we hope will initiate a positive feedback loop where proof automation leads to better data and ultimately improves LLMs on mathematics.
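For readers who have not seen Lean, here is a small tactic proof of the kind that Lean checks step by step. It is a toy Lean 4 example chosen for illustration, with a made-up theorem name, and it does not use Lean Copilot itself. Each indented line is a tactic, and these are the kinds of steps that an LLM can propose and Lean then accepts or rejects.

```lean
-- Toy statement: the two halves of a conjunction can be swapped.
theorem and_swap_sketch (p q : Prop) (h : p ∧ q) : q ∧ p := by
  constructor   -- split the goal `q ∧ p` into two subgoals: `q` and `p`
  · exact h.2   -- first subgoal: `q` is the right component of `h`
  · exact h.1   -- second subgoal: `p` is the left component of `h`
```

If any tactic were wrong, Lean would report an error at that step, so an incorrect suggestion cannot make it into a finished proof.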

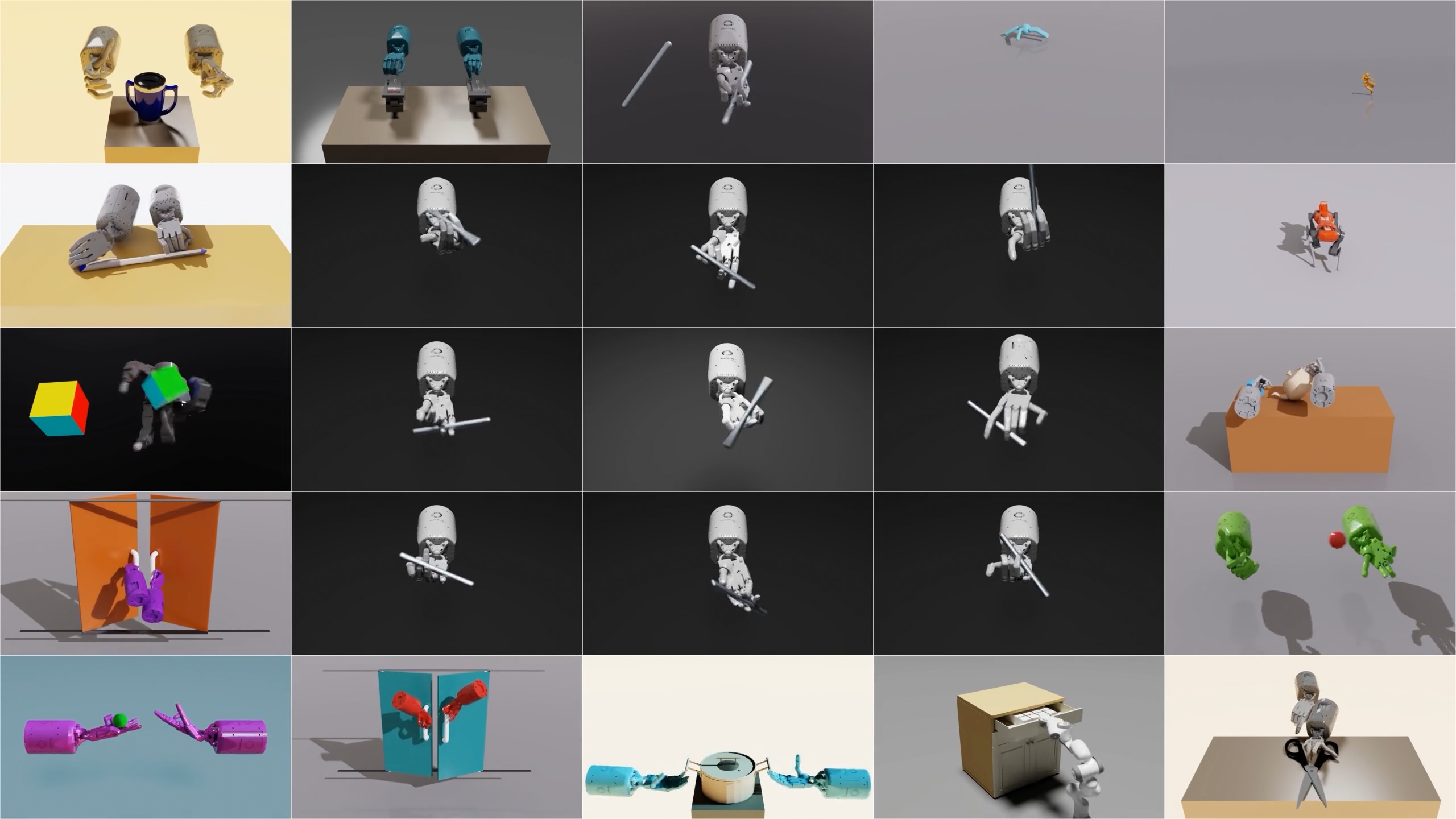

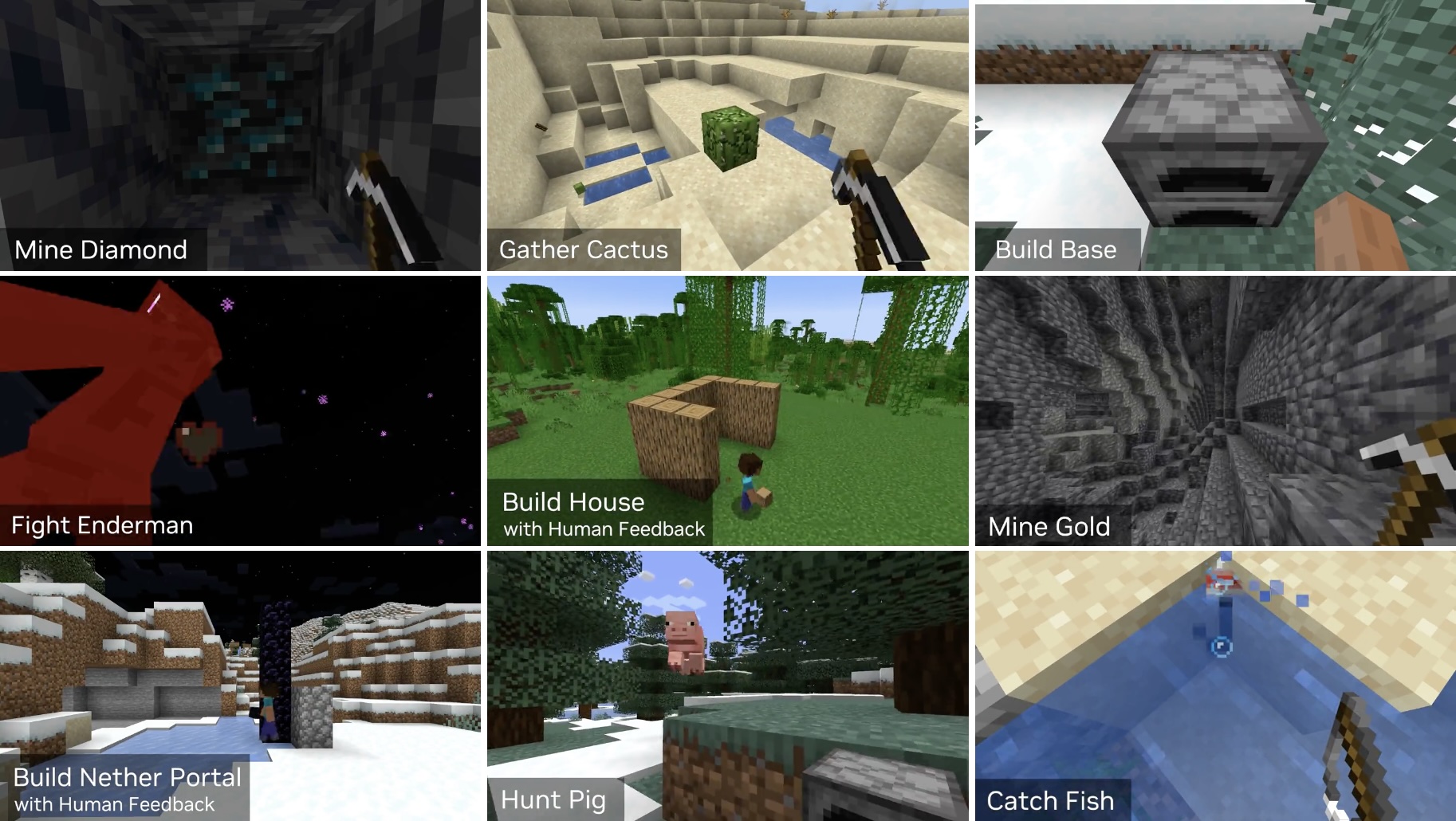

AI agents with language models

We created embodied agents that interact with LLMs to solve complex open-ended tasks in robotics and in virtual environments such as Minecraft. This work showed that in-context learning, without fine-tuning language models or doing reinforcement learning, can provide strong performance for interactive learning in many domains.

Personal updates

I am privileged to have the support of the Schmidt Futures AI 2050 senior fellowship and the Guggenheim fellowship to do open-ended, high-risk, high-reward research in AI+Science. I also got to talk about it on Bloomberg and PBS. It was also an honor to attend the ACM fellow award ceremony this summer. All these honors are a reflection of the amazing team that I am privileged to be part of, which includes students, researchers, and collaborators.